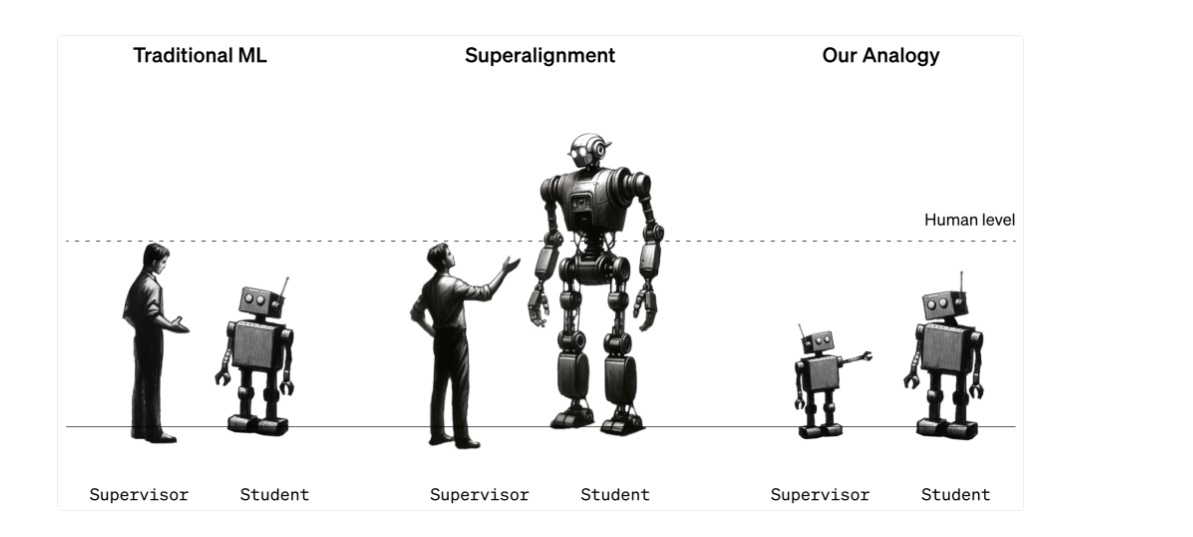

MIT Technology Review, by Will Douglas Heaven

OpenAI has announced the first results from its superalignment team, the firm’s in-house initiative dedicated to preventing a superintelligence (a hypothetical future computer that can outsmart humans) from going rogue. Unlike many of the company’s announcements, this heralds no big breakthrough. In a low-key research paper, the team describes a technique that lets a less powerful large language model supervise a more powerful one, and suggests that this might be a small step toward figuring out how humans might supervise superhuman machines.

Less than a month after OpenAI was rocked by a crisis when its CEO, Sam Altman, was fired by its oversight board (in an apparent coup led by chief scientist Ilya Sutskever) and then reinstated three days later, the message is clear: it’s back to business as usual.

Yet OpenAI’s business is not usual. Many researchers still question whether machines will ever match human intelligence, let alone outmatch it. OpenAI’s team takes machines’ eventual superiority as given. “AI progress in the last few years has been just extraordinarily rapid,” says Leopold Aschenbrenner, a researcher on the superalignment team. “We’ve been crushing all the benchmarks, and that progress is continuing unabated.”

For Aschenbrenner and others at the company, models with human-like abilities are just around the corner. “But it won’t stop there,” he says. “We’re going to have superhuman models, models that are much smarter than us. And that presents fundamental new technical challenges.”

In July, Sutskever and fellow OpenAI scientist Jan Leike set up the superalignment team to address those challenges. “I’m doing it for my own self-interest,” Sutskever told MIT Technology Review in September. “It’s obviously important that any superintelligence anyone builds does not go rogue. Obviously.”

Amid speculation that Altman was fired for playing fast and loose with his company’s approach to AI safety, Sutskever’s superalignment team loomed behind the headlines. Many have been waiting to see exactly what it has been up to.

Dos and don’ts